Butterfly Identification App

Role

In the final semester of my undergraduate degree, I completed a capstone project that involved identifying a problem and designing a solution to it. I worked in a group with two classmates, and our group was partnered with a local start-up whose goal was to develop an Android app that could identify an insect species from a picture. Our group was responsible for developing a user-friendly Android app that worked with the insect identification the start-up had already implemented. My role was to help program the app and its user interface, and to set up some of the tools we used during development.

Problem

The start-up company wanted to create a mobile app that could identify an insect from an image. To build a prototype of this idea, the company was working with a butterfly conservatory to identify the species of butterflies kept there. The company already had a machine learning model that could identify different butterfly species from an image; the remaining piece of the prototype was the app. The app needed to be easy to use and also had to provide information about the identified species. My group and I were responsible for this part of the prototype.

Solution

The group decided to focus on developing an Android app. Android runs on many different phones and OS versions, and an app can behave differently depending on which version of Android is being used. Because of this, we tested the app on several different Android phones throughout development to make sure it would work on as many devices as possible.

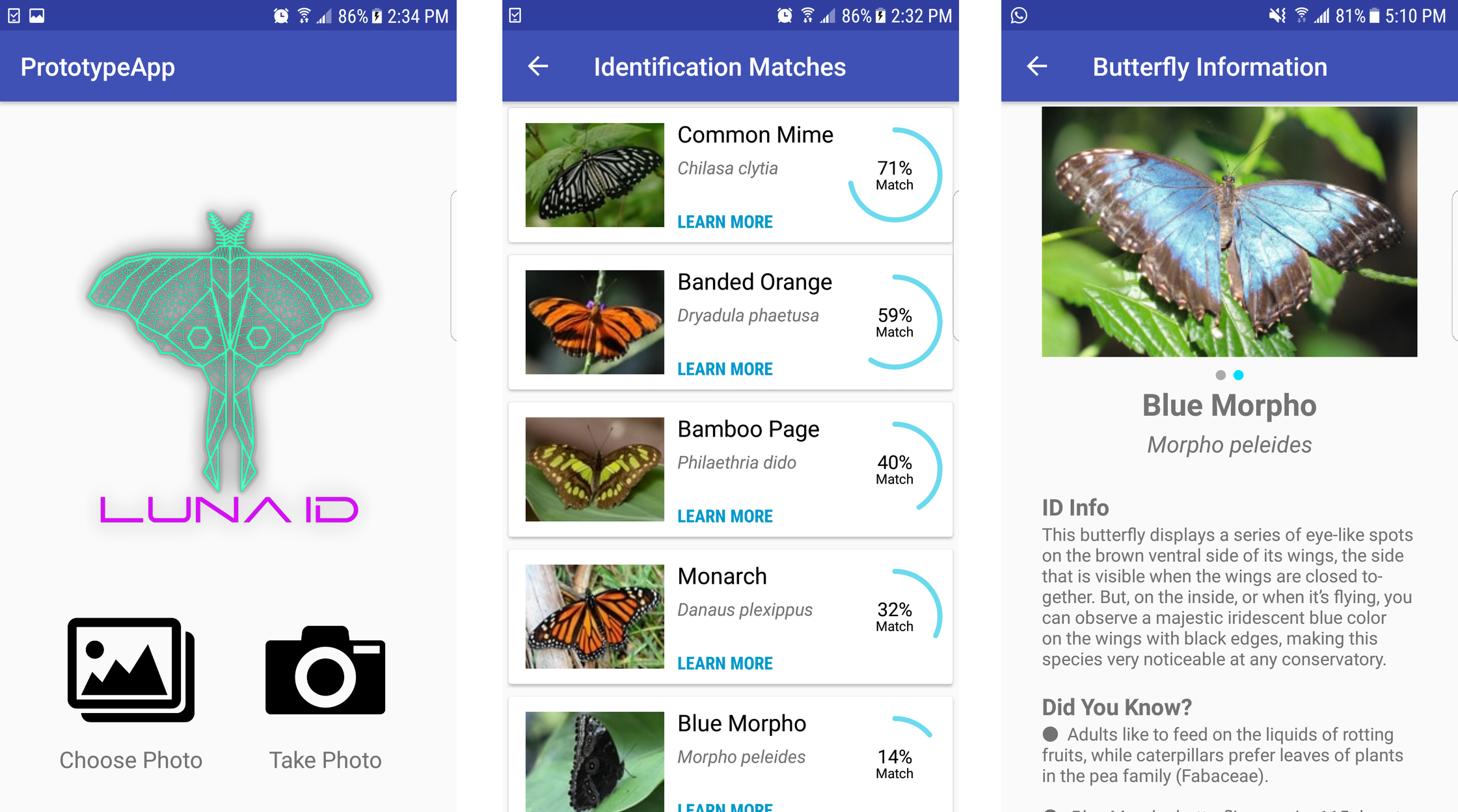

There were two main use cases to consider for the app. The first was taking a picture from within the app: the user opens the phone's camera in the app and takes a picture, which is then automatically sent to the machine learning model on a server. Once the model returns a result, the app displays the species the butterfly in the image could be, each with a percentage indicating how closely the image matches that species in the database. The user can then select one of the displayed species to see additional images and information about it.

The second use case was letting the user upload an image already stored on their phone. This allows a user to identify a butterfly at any time, as long as they have an image of it. Once the image has been sent to the model and the results come back, the rest of the app behaves exactly the same way as when the user takes a picture.
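Both use cases end in the same step: showing the candidate species ranked by how well they match the image. The write-up does not describe the server's response format, so the sketch below assumes, purely for illustration, that the model returns a list of species names with confidence scores between 0 and 1. The `Prediction` record, the `formatTopMatches` method, and the sample scores are hypothetical and are not taken from the actual app. The code sorts the candidates from most to least likely and converts each score into the percentage shown to the user. It uses plain Java (16 or later, for records) rather than Android APIs so it can run on its own.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;

public class ResultFormatter {

    /** Hypothetical shape of one model result: a species name and a confidence in [0, 1]. */
    public record Prediction(String species, double confidence) {}

    /**
     * Sorts predictions from most to least likely, keeps at most `limit` of them,
     * and turns each into a display line such as "Monarch: 87.5%".
     */
    public static List<String> formatTopMatches(List<Prediction> predictions, int limit) {
        // Copy first so the caller's list is not reordered.
        List<Prediction> sorted = new ArrayList<>(predictions);
        sorted.sort(Comparator.comparingDouble(Prediction::confidence).reversed());

        List<String> lines = new ArrayList<>();
        for (int i = 0; i < Math.min(limit, sorted.size()); i++) {
            Prediction p = sorted.get(i);
            // Convert the 0-1 score into a percentage with one decimal place.
            lines.add(String.format(Locale.ROOT, "%s: %.1f%%", p.species(), p.confidence() * 100));
        }
        return lines;
    }

    public static void main(String[] args) {
        // Made-up scores standing in for a server response.
        List<Prediction> fromServer = List.of(
                new Prediction("Blue Morpho", 0.08),
                new Prediction("Monarch", 0.875),
                new Prediction("Painted Lady", 0.045));
        formatTopMatches(fromServer, 2).forEach(System.out::println);
    }
}
```

Because both the camera and the gallery paths produce the same kind of result, a single formatting step like this can serve both, which matches the description of the app behaving identically after the image is sent.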

An example of the user interface of the app can be seen below.

Impact

The Android app was completed in time for our presentation. I published the app on the Google Play Store, where Android users find and download apps. During the presentation, multiple people tried the app on the demo phones we provided, and others downloaded it themselves. Additionally, the start-up company was very pleased with the app, and they were able to take our prototype and develop it further.

The Google Play Store page for the app can be seen below.

Code/Demo/Further Information

The following video is a demonstration of how the final version of the app worked.